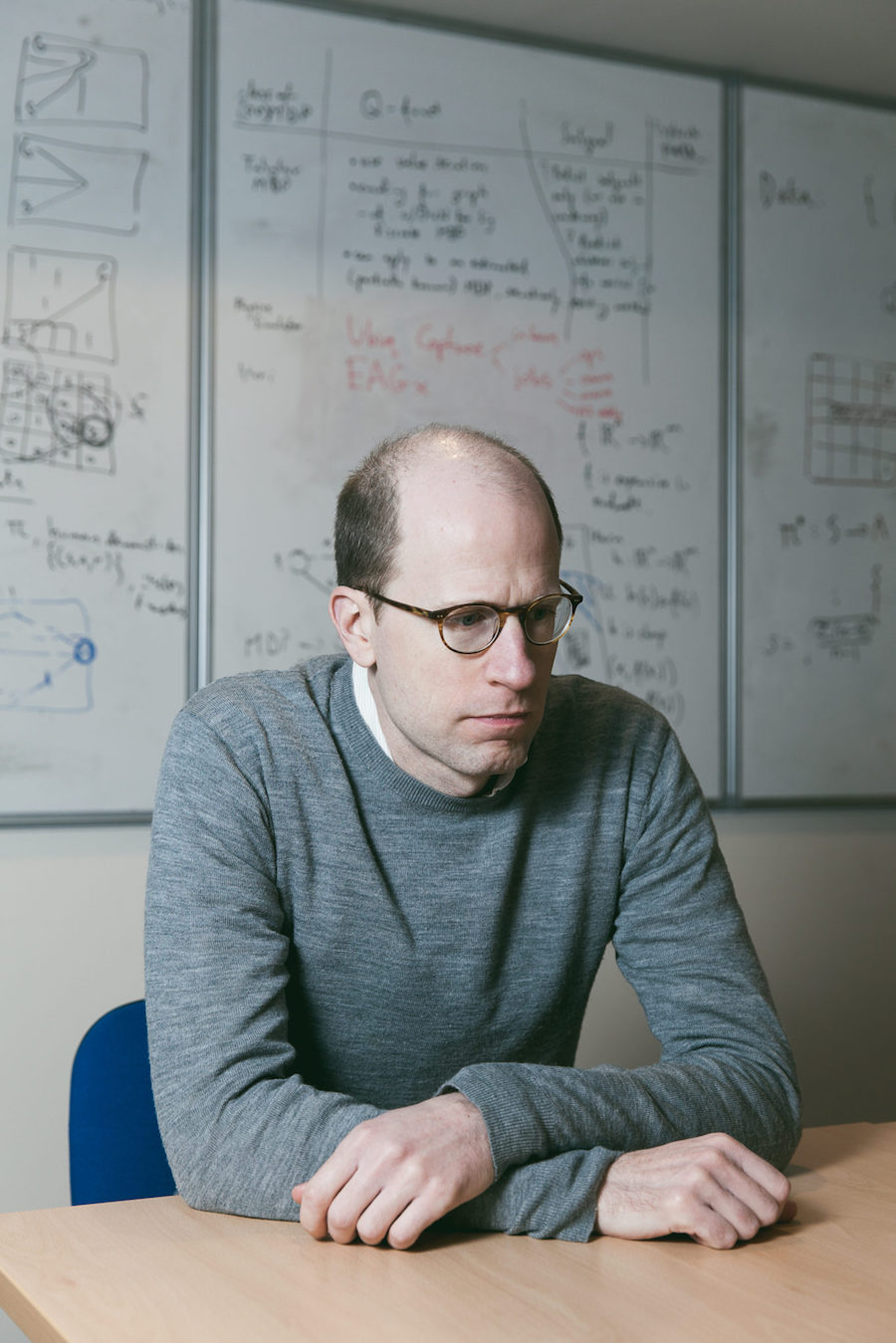

Author: Elena Cué

Nick Bostrom. Photo: Allen McEachern

The Swedish philosopher is one of the most influential in the field of superintelligence. He is the director of the Future of Humanity Institute at the University of Oxford.

Transhumanism explores the possibility of improving the human condition, physically, emotionally and cognitively, through scientific and technological progress. I speak about this intellectual, scientific and cultural movement with philosopher Nick Bostrom (Sweden, 1973), who founded the World Transhumanist Association together with David Pearce and is one of the most influential thinkers in the field of superintelligence. He is also the director of the Future of Humanity Institute and the Governance of Artificial Intelligence Program at the University of Oxford. He is the author of more than 200 publications, including "Human Enhancement" and "Superintelligence: Paths, Dangers, Strategies", a “New York Times” bestseller strongly recommended by Bill Gates and Elon Musk.

What led you to create the World Transhumanist Association?

Well, it happened in the 90s. At that time, it seemed to me that there was no adequate forum to discuss the impact of emerging technologies and how they might affect the human condition. Back then, the emphasis was mainly on the negative. Most of the relevant topics were not discussed at all, and the few discussions in academic bioethics always focused on the downsides, such as possible dehumanization from using technology to enhance human capacities. There needed to be another voice. The association was an attempt to create a platform that would meet this need.

And what about today?

I have not been involved for many years. In the early 2000s, these problems found a voice and were developed in the academic field. Thus, the organization was no longer a necessity.

There is talk about artificial intelligence attempting to develop a conscious intelligence in order to learn in the same way humans do. What can you tell us about this?

I think that a lot of the excitement over the last eight years is due to advances in deep learning, which is a particular approach within AI. This way of processing information is, in many ways, similar to how the human mind works. The excitement arises because it seems to be a more "general" way of structuring intelligence: a type of algorithm that has the general ability to learn from data (big data), learn from experience, and build representations of patterns in that data that have not been explicitly pre-programmed by humans. This new concept points to Artificial General Intelligence (AGI).

Can you give an example?

The same algorithm that can learn to play one Atari game can learn to play other Atari games. With small modifications, it can learn to play chess or Go, to recognize cats in images and to recognize speech. Although there are limits to what can be done today, there are indications that we might be approaching the type of mechanism that provides flexibility similar to that of human intelligence, a type of general learning capacity.

Some voices are more skeptical.

If you look at the systems that are used in industry, they are still a kind of hybrid. In some cases, modern deep learning systems are used specifically for image and voice recognition, but many other systems employed by companies are still mainly expert systems built for a domain-specific purpose on the old-school model. This contributes to the confusion.

When do you think molecular nanotechnology will exist, making it possible to manufacture tiny machines that could be inserted into our organisms and thus eradicate many diseases and prolong life?

It's a good question... I believe that nanotechnology will probably become viable after the development of artificial superintelligence. The same goes for many other advanced technologies, which could be developed by using superintelligence for research and development. However, there could be a scenario in which molecular nanotechnology is developed before artificial intelligence takes off. In that case, the first applications would present risks that we would have to survive, and if we succeeded, we would then have to survive the risks associated with superintelligence once it was developed. Therefore, if we could influence the order in which the two technologies are developed, the ideal would be to obtain superintelligence before molecular nanotechnology.

Another way to prolong life would be through cryonics. More than 300 people have had their bodies cryogenically frozen or their brains preserved in nitrogen. You registered for this service. What prompted you to do so?

Actually, in the interactions I have had with the media, I have never confirmed that I did. My stance has always been that my funeral arrangements are a private matter. I know there was speculation in a newspaper two years ago... It is also true that some of my colleagues are cryonics clients and have made it public, like Dr. Anders Sandberg, who is one of our researchers here.

With cultural diversity and different moral codes worldwide, how can we reach a global consensus on ethical limits in genetic research?

For the moment, humanity does not really have a single coordinated plan for the future. There are many different countries and groups, each one pursuing its own initiatives.

From what you say, foresight is essential.

If you think about the development of nuclear weapons 70 years ago, it turned out that it was hard to make a nuclear bomb. You need highly enriched uranium or plutonium, which are very difficult to get because you need industrial-sized plants to make them. In addition, a huge amount of electricity is needed, so much so that you could see it from space. It is a hard process. It is not something you could do in your garage. However, let us suppose that they had discovered an easy way to unleash the energy of the atom; that might then have been the end of human civilization, as it would have been impossible to control.

It is a worrying thought.

Yes, and in such cases humanity would perhaps have to take steps to build a greater capacity for global coordination, in case such a vulnerability arises or a new arms race emerges. The more powerful our technologies are, the greater the damage we can cause if we use them in a hostile or reckless manner. At this point, I think this is a great weakness for humanity, and we can only hope that the technologies we discover do not lend themselves to easy, destructive applications.

Recent studies have shown that graphene can effectively interact with neurons. What do you think about the advances in the development of brain-machine interfaces (communication zones) that use graphene?

It is exciting from the point of view of medicine and of people with disabilities. For patients with spinal cord damage, it has several promising applications, such as neuroprostheses. However, I am slightly skeptical that it would enhance the functionality of a healthy person enough to make it worth the risks, pain and problems of a surgical intervention.

Do you think it is not worth it for a healthy person?

It is quite hard to add functionality to a healthy human mind that you could not get, in a similar way, by interacting with a computer outside of your body, simply typing things on a keyboard or receiving inputs through your eyeballs by looking at a screen. We already have high bandwidth input and output channels to the human brain, for instance, through our fingers or through speech. It is much more relevant to find a solution to how to access, organize and process the vast amount of information available with the limitations of the human brain. That is the bottleneck of the problem and it is where the focus of the development should lie.

In your book Superintelligence you comment that the artificial intelligence research project began at Dartmouth College in 1956. Since then there have been periods of enthusiasm and regression. At present, it seems that the biggest advocate for the creation of a post-human AI is Ray Kurzweil, founder of Singularity University and financed by Google. Do you think they will achieve their objective this time?

I do not think Ray Kurzweil is the leader in AI research. There is a big global research community with many important people making significant contributions, and I do not think he plays a significant part in most of them.

Would such research be aimed at replicating the human brain including consciousness?

Artificial intelligence is mainly about finding ways to make machines solve difficult problems. Whether or not they do so by emulating, assimilating or drawing inspiration from the human brain is more of a tactical decision. If there are useful insights that can be extracted from neuroscience, they will be taken advantage of, but the main objective is not to try to replicate the human mind.

I thought a posthuman project already existed…

If you are referring to the Human Brain Project, then yes. It might be a little bit closer to trying to emulate various details and levels of the human condition. I have the impression that it began with a very ambitious vision and probably excessive expectations, with very detailed models of a cortical column. However, after several dissenting voices were raised, it has become a funding channel for several neuroscience projects.

You mentioned that with superintelligence (on a human level) we can obtain great results but at the risk of human extinction.

I think superintelligence would be a kind of general-purpose technology, because it would make it possible to invent other technologies. If you are superintelligent, you can do scientific or engineering work much faster and more effectively than human scientists and engineers can. So imagine all the things that humans could achieve if we had 40,000 years to work on them; perhaps we would have space colonies, upgrades to our bodies, cures for aging and perfect virtual realities. I think all of these technologies, and others we have not thought of yet, could be developed by superintelligent machines, perhaps within a relatively short time after their arrival. That gives us an idea of the vast potential benefits.

What would be the “existential risks” we would face with artificial intelligence?

I see two types of threats. The first one arises from a failure to align objectives. You are creating something that would be very intelligent and very powerful at the same time. If we are not capable of finding a way to control it, we could give rise to a superintelligent system that might prioritize attaining its own values to the detriment of ours. The other risk is that humans use this powerful technology in a malicious or irresponsible way, as we have done with many other technologies throughout our history. We use them not only to help each other or for productive purposes, but also to wage wars or to oppress each other. This would be the other big threat with such advanced technology.

Stephen Hawking called for “expansion to space” and Elon Musk’s company SpaceX expects to send people to Mars in the near future. What do you think are the reasons we will be forced to migrate to other planets? Could humans live on Mars?

At this moment in time, Mars is not a good place to live. Meaningful space colonization will happen after superintelligence. In the short term, it seems very unattractive; it would be easier to create a habitat at the bottom of the sea or on top of the Himalayas than on the Moon or Mars. Until we have exhausted places of this kind, it is difficult to see the practical benefit of doing so on Mars. However, in the long term, space is definitely a goal; Earth is a small crumb floating in an almost infinite expanse of resources.
